Multi-Camera Vision System for Automated Material Detection and Sorting

A real-time computer vision system for material recovery and worker safety monitoring on industrial conveyor belts

1. Overview

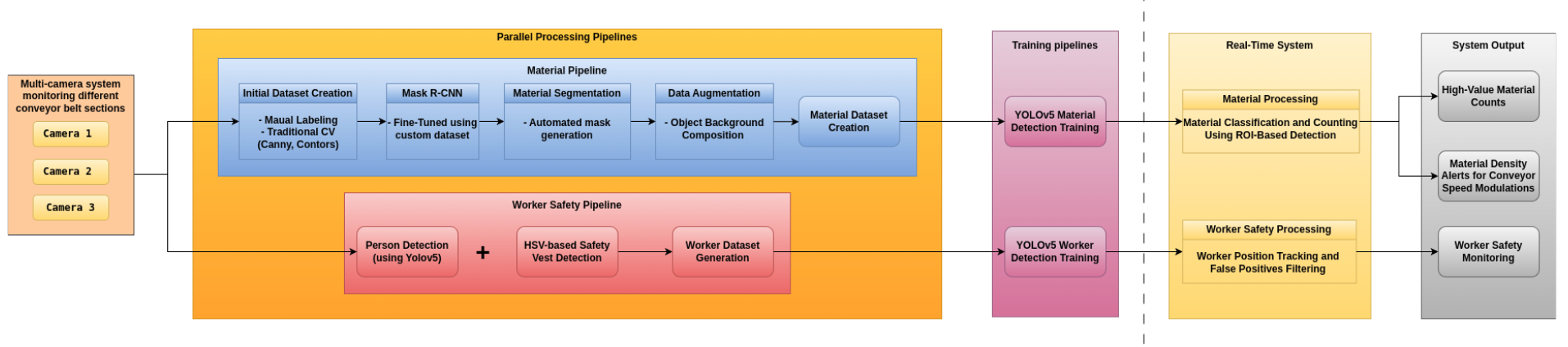

This real-time computer vision system, developed during my engagement at VEE ESS Engineering, improves high-value material recovery and worker safety monitoring on industrial conveyor belts. It combines YOLOv5 for material detection and instance segmentation with background subtraction for motion analysis. The architecture features camera-specific region-of-interest (ROI) processing, worker-interaction filtering to reduce false positives, and a robust counting mechanism. Semi-automating data collection with a fine-tuned Mask R-CNN significantly reduced manual annotation effort while maintaining dataset quality.

End-to-end system architecture showing the complete pipeline from data collection to deployment

2. System Architecture

2.1 Data Acquisition and Processing Pipeline

The system architecture consists of several interconnected components that work together to create a robust, real-time material detection and sorting system:

- Multi-Camera Input: Multiple cameras monitor different sections of the conveyor belt, providing comprehensive coverage

- Parallel Processing Pipelines: Separate pipelines for material detection and worker safety monitoring

- ROI-Based Processing: Camera-specific regions of interest to focus computational resources on relevant areas

- Real-Time Detection System: YOLOv5-based detection models for materials and workers

- False Positive Filtering: Worker interaction detection to prevent miscounting

2.2 Semi-Automated Data Annotation

Creating a robust dataset was one of the key challenges. A multi-stage approach was implemented:

- Initial Dataset Creation: A small initial dataset of approximately 800 images (200 per material class) was created using:

  - Manual annotation with LabelMe

  - Traditional computer vision techniques (Canny edge detection, adaptive thresholding)

  - Semi-automated annotation using bounding boxes from a pre-trained object detector

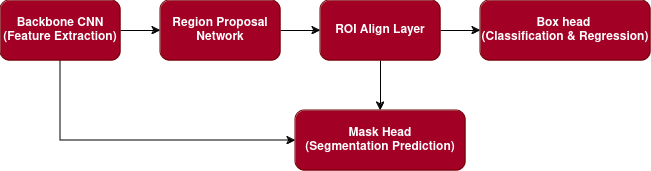

- Mask R-CNN Fine-Tuning: The initial dataset was used to fine-tune a pre-trained Mask R-CNN model, specifically adapting it to detect and segment the target materials

Initial dataset creation using manual annotation

Simple Mask R-CNN Architecture Diagram
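As an illustration of the semi-automated annotation step, the sketch below turns bounding boxes from a pre-trained detector into a LabelMe-style annotation that can then be corrected by hand. It is a minimal sketch, not the project's actual tooling: the function name `boxes_to_labelme`, the `copper` label, the file name, and the `version` string are all assumptions made for the example.

```python
import json


def boxes_to_labelme(image_path, width, height, detections):
    """Build a LabelMe-style annotation dict from detector boxes.

    `detections` is a list of (label, x1, y1, x2, y2) tuples in pixels.
    """
    shapes = []
    for label, x1, y1, x2, y2 in detections:
        shapes.append({
            "label": label,
            # LabelMe stores a rectangle as two opposite corner points.
            "points": [[float(x1), float(y1)], [float(x2), float(y2)]],
            "group_id": None,
            "shape_type": "rectangle",
            "flags": {},
        })
    return {
        "version": "5.0.1",  # assumed version string, adjust to the installed LabelMe
        "flags": {},
        "shapes": shapes,
        "imagePath": image_path,
        "imageData": None,  # image is referenced by path rather than embedded
        "imageHeight": height,
        "imageWidth": width,
    }


# Hypothetical detection on a hypothetical frame.
annotation = boxes_to_labelme(
    "frame_0001.jpg", 1280, 720, [("copper", 100, 150, 220, 260)]
)
print(json.dumps(annotation, indent=2))
```

Opening the resulting JSON in LabelMe lets an annotator adjust or replace the pre-filled rectangles instead of drawing every shape from scratch.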

2.3 Automated Material Segmentation

Using the fine-tuned Mask R-CNN model, the system could automatically generate segmentation masks for a much larger dataset:

- Automated Mask Generation: The fine-tuned model processed video feeds through predefined ROIs

- Segmented Instance Extraction: Over 43,000 segmented material instances were automatically extracted

- Dataset Expansion: This approach dramatically improved data collection efficiency

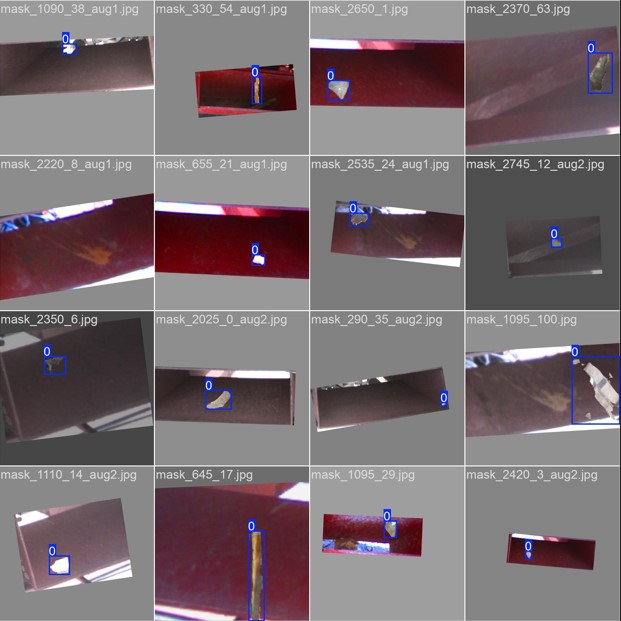

Individual material instances segmented and extracted from the conveyor belt stream
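The instance extraction step can be sketched as follows: given a binary mask for one predicted instance, find its tight bounding box and cut out only the masked pixels. This is a dependency-free illustration on nested lists, assuming the mask has the same shape as the image; a production version would more likely operate on NumPy arrays. The helper names `mask_bbox` and `extract_instance` are hypothetical.

```python
def mask_bbox(mask):
    """Return the tight box (x0, y0, x1, y1) of a binary mask, or None if empty.

    x1 and y1 are exclusive, so the crop is mask[y0:y1][x0:x1].
    """
    if not mask or not mask[0]:
        return None
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    if not rows:
        return None
    return cols[0], rows[0], cols[-1] + 1, rows[-1] + 1


def extract_instance(image, mask, background=0):
    """Crop one instance out of `image`, blanking pixels outside the mask."""
    box = mask_bbox(mask)
    if box is None:
        return None
    x0, y0, x1, y1 = box
    return [
        [image[y][x] if mask[y][x] else background for x in range(x0, x1)]
        for y in range(y0, y1)
    ]


# Tiny grayscale example: a 3x4 image with an L-shaped instance.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
mask = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 1, 0]]
crop = extract_instance(image, mask)
```

Keeping the mask alongside the crop matters later: the blanked pixels mark where the background should show through when the instance is composited onto another image.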

2.4 Data Augmentation Strategy

To create a dataset that generalizes well to real-world conditions, a sophisticated data augmentation strategy was implemented:

- Object-Background Compositing: Segmented material images were overlaid onto images of empty conveyor belt bins

- Multiple Transformations: Each composite image underwent various transformations:

  - Rotations to simulate different orientations

  - Scaling to account for size variations

  - Brightness and contrast adjustments to handle lighting changes

- Dual Dataset Creation: Two distinct datasets were generated:

  - Detection dataset for YOLOv5 training

  - Segmentation dataset for instance segmentation

Defining the ROI for environmental (bin) extraction for data augmentation

Data augmentation showing segmented materials overlaid on bin backgrounds with various transformations
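One useful property of object-background compositing is that the detection label comes for free: the paste position and object size are known, so no manual annotation is needed. The sketch below derives a YOLO-format label line (class index followed by normalized center x, center y, width, height) for one pasted object. It assumes `obj_w` and `obj_h` describe the axis-aligned extent of the object after any rotation and scaling; the function name and the example numbers are hypothetical.

```python
def yolo_label(class_id, x, y, obj_w, obj_h, bg_w, bg_h):
    """Return a YOLO label line for an object pasted with its top-left at (x, y).

    All inputs are in pixels. The box is clipped to the background so that
    objects partially pasted off the edge still get a valid label.
    """
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + obj_w, bg_w), min(y + obj_h, bg_h)
    if x1 <= x0 or y1 <= y0:
        raise ValueError("object lies completely outside the background")
    cx = (x0 + x1) / 2 / bg_w
    cy = (y0 + y1) / 2 / bg_h
    w = (x1 - x0) / bg_w
    h = (y1 - y0) / bg_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}"


# A 200x100 object pasted at (100, 50) on a 1000x500 bin image.
line = yolo_label(2, 100, 50, 200, 100, 1000, 500)
print(line)
```

The same paste coordinates can be reused to write the transformed mask into the segmentation dataset, so both datasets stay consistent with each composite image.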

3. Worker Safety Monitoring

3.1 Worker Detection System

A separate but integrated worker detection system was implemented to ensure worker safety and prevent false positive material counts:

- Color-Based Initial Detection: A fast color-based detection method identified high-visibility safety vests

- YOLOv5 Worker Detection: A specialized YOLOv5 model was trained for robust worker detection

- Bounding Box Generation: Precise bounding boxes around workers enabled interaction detection

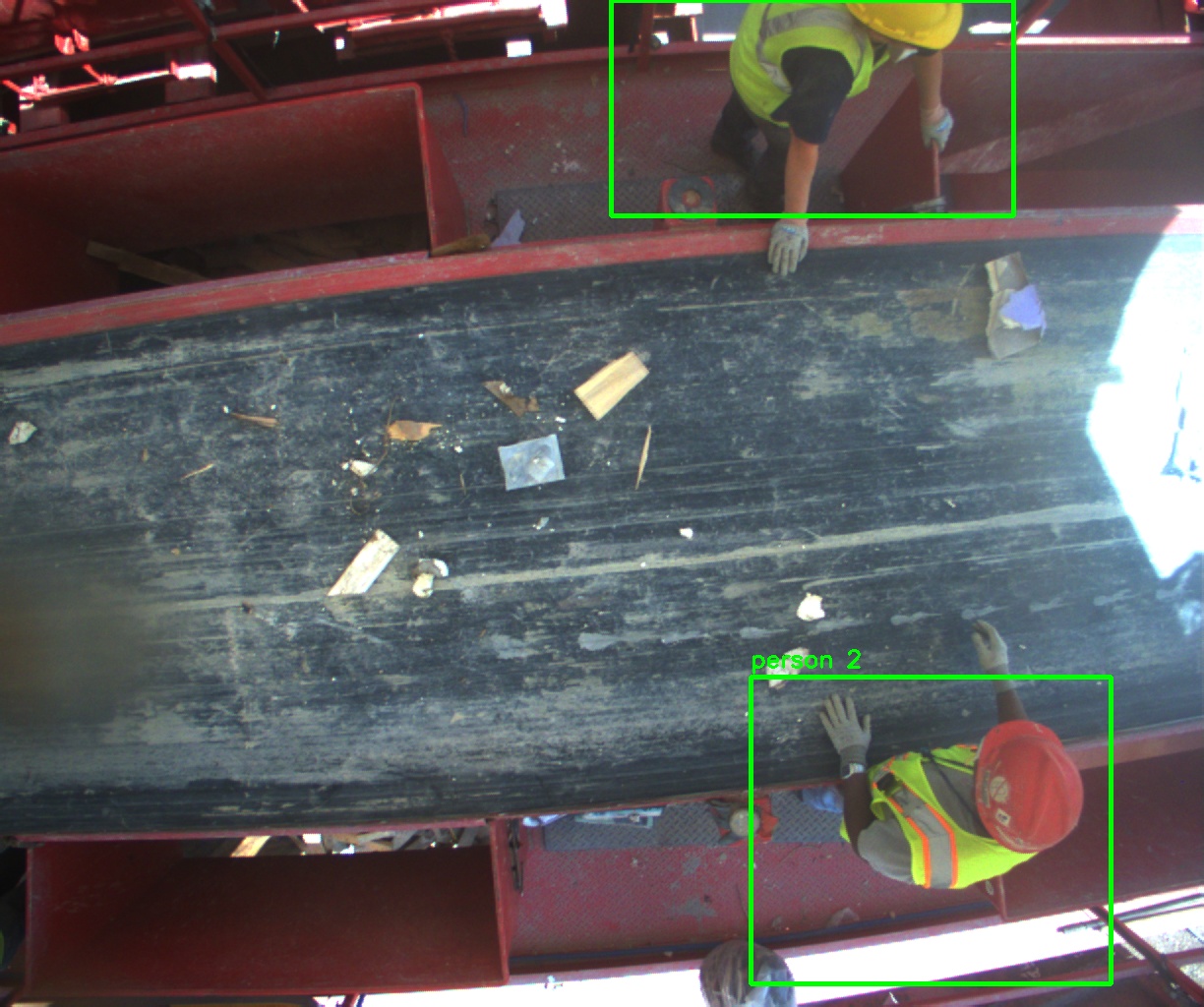

Worker detection system identifying safety vest-wearing personnel with precise bounding boxes
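The color-based pre-check can be illustrated with a simple HSV threshold: high-visibility vests are strongly saturated and bright, with a hue in the yellow to yellow-green band. The sketch below uses only the standard library's `colorsys`. The hue range, saturation and brightness thresholds, and the function names are assumptions chosen for the example, not the values used in the deployed system; orange vests would need a second, lower hue band.

```python
import colorsys


def is_hi_vis(r, g, b, hue_range=(0.11, 0.25), min_sat=0.6, min_val=0.6):
    """True if an 8-bit RGB pixel falls in an assumed hi-vis yellow band.

    colorsys expresses hue in [0, 1], so 0.11 to 0.25 is roughly 40 to 90 degrees.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val


def hi_vis_fraction(pixels):
    """Fraction of (r, g, b) pixels that look like hi-vis vest material."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(is_hi_vis(*p) for p in pixels) / len(pixels)


# Two fluorescent yellow-green pixels, one grey, one blue.
sample = [(204, 255, 0), (128, 128, 128), (0, 0, 255), (204, 255, 0)]
fraction = hi_vis_fraction(sample)
```

A cheap check like this is fast but sensitive to lighting and similarly colored materials, which is one reason to follow it with a trained detector for the final worker boxes.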

3.2 False Positive Filtering

A critical innovation in this system was the ability to prevent false positive material counts when workers interact with the conveyor belt:

- Worker Overlap Detection: Materials detected within worker bounding boxes are not counted

- Temporal Cooldown: After counting an object, a cooldown period prevents immediate recounting

- Background Subtraction: MOG2 background subtractor further refines motion detection within ROIs

Examples of false positives detected and filtered by the system
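The worker-overlap check and the temporal cooldown can be sketched together as a small counter. This is a minimal illustration under stated assumptions: a material is treated as "within" a worker box when its center lies inside that box, the cooldown is measured in frames, and at most one object is counted per frame. The class name `MaterialCounter` and the parameter `cooldown_frames` are hypothetical, and the MOG2 motion check is left out.

```python
def center_inside(box, region):
    """True if the center of `box` lies inside `region`; both are (x1, y1, x2, y2)."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    return region[0] <= cx <= region[2] and region[1] <= cy <= region[3]


class MaterialCounter:
    """Counts materials while ignoring worker overlaps and rapid re-detections."""

    def __init__(self, cooldown_frames=15):
        self.cooldown_frames = cooldown_frames
        self.count = 0
        self._last_counted = None  # frame index of the most recent count

    def update(self, frame_idx, material_boxes, worker_boxes):
        """Process one frame; return True if a material was counted."""
        in_cooldown = (
            self._last_counted is not None
            and frame_idx - self._last_counted < self.cooldown_frames
        )
        if in_cooldown:
            return False
        for box in material_boxes:
            # Skip materials that a worker is currently handling.
            if any(center_inside(box, worker) for worker in worker_boxes):
                continue
            self.count += 1
            self._last_counted = frame_idx
            return True
        return False


counter = MaterialCounter(cooldown_frames=10)
item = (10, 10, 50, 50)
results = [
    counter.update(0, [item], []),                        # counted
    counter.update(5, [item], []),                        # still in cooldown
    counter.update(12, [item], [(0, 0, 100, 100)]),       # inside a worker box
    counter.update(20, [item], [(200, 200, 300, 300)]),   # counted again
]
```

The center-in-box rule is the simplest option; an intersection-over-area threshold would be a reasonable alternative when worker boxes are large relative to the belt.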

4. Real-Time Processing

The real-time processing system integrates all components to deliver accurate material detection and counting while ensuring worker safety:

- Frame Acquisition: Continuous frame capture from multiple camera feeds

- ROI Processing: Camera-specific regions are processed separately

- Background Subtraction: Intelligent background subtraction identifies moving objects

- False Positive Filtering: Worker overlap detection prevents miscounting

- Material Classification: Detected materials are classified and counted

Real-time segmentation of materials on the conveyor belt using ROI-based detection

Real-time detection results showing system performance with detected materials in the production environment
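The camera-specific ROI step can be sketched as a lookup table from camera ID to a rectangle, followed by a filter that keeps only confident detections whose center falls inside that camera's ROI. The camera names, ROI coordinates, material labels, and the `min_conf` threshold below are all hypothetical, and rectangular ROIs are assumed for simplicity.

```python
from collections import Counter

# Assumed per-camera ROIs as (x1, y1, x2, y2) in pixels.
CAMERA_ROIS = {
    "cam_left": (100, 200, 900, 600),
    "cam_right": (50, 150, 700, 550),
}


def in_roi(box, roi):
    """True if the center of `box` lies inside `roi`."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    return roi[0] <= cx <= roi[2] and roi[1] <= cy <= roi[3]


def process_detections(camera_id, detections, rois=CAMERA_ROIS, min_conf=0.5):
    """Count detections per class for one camera frame.

    `detections` is a list of (label, confidence, box) tuples.
    """
    roi = rois[camera_id]
    counts = Counter()
    for label, conf, box in detections:
        if conf >= min_conf and in_roi(box, roi):
            counts[label] += 1
    return counts


frame_detections = [
    ("aluminium", 0.90, (200, 300, 300, 400)),  # inside ROI, confident
    ("copper", 0.80, (0, 0, 50, 50)),           # outside ROI
    ("copper", 0.30, (400, 400, 500, 500)),     # below confidence threshold
]
frame_counts = process_detections("cam_left", frame_detections)
```

Keeping the ROIs in a plain mapping makes it straightforward to load them from a per-site configuration file when the camera layout changes.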

5. Performance Metrics

The two YOLOv5 models achieved the following detection metrics:

| Model | mAP50 | mAP50-95 | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| Material Detection | 0.995 | 0.968 | 0.999 | 0.999 | 0.999 |
| Worker Detection | 0.977 | 0.745 | 0.938 | 0.960 | 0.950 |
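For reference, the F1 score is the harmonic mean of precision and recall. The short sketch below recomputes it from the rounded table values. Recomputing from rounded inputs gives about 0.949 for the worker model, so the reported 0.950 was presumably derived from unrounded precision and recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


material_f1 = round(f1_score(0.999, 0.999), 3)
worker_f1 = round(f1_score(0.938, 0.960), 3)
print(material_f1, worker_f1)
```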

System Performance

- Real-time processing: 30+ FPS

- Inference latency: <15 ms

- False positive rate: <0.5%

- Robust to lighting variations and occlusions

6. Key Contributions

My key contributions to this project at VEE ESS Engineering included:

- Pipeline architecture: Designed and implemented the end-to-end computer vision pipeline for automated material detection and sorting

- Semi-automated data collection: Created a semi-automated data collection and annotation system that reduced manual labeling effort by 90%

- Worker safety system: Developed the worker detection and false positive filtering system that increased counting accuracy by 35%

- ROI management: Designed the interactive ROI definition system that enabled flexible deployment across different conveyor configurations

- Model training: Trained and optimized the YOLOv5 models for both material detection and worker safety monitoring

7. Technologies & Skills Used

- Languages: Python, C++

- Frameworks: PyTorch, OpenCV, ROS (Noetic), NumPy, Pandas

- Machine Learning: YOLOv5, Mask R-CNN, Transfer Learning, Data Augmentation

- Computer Vision: Object Detection, Instance Segmentation, Background Subtraction, ROI Processing